Disclaimer

The following example uses the beta Azure CLI for now. The CLI change is not yet available in the stable release of Azure CLI.

When deploying from GitHub Actions to Azure, you have to log in to Azure with the azure/login action. This action requires a Service Principal secret, which can be stored in GitHub secrets. However, these secrets are available to every workflow that uses them and can even be written to the output, for example with echo.
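For comparison, a secret-based login step typically looks something like the sketch below. It assumes the Service Principal details (client ID, client secret, tenant ID, and subscription ID) are stored as JSON in a repository secret; the secret name `AZURE_CREDENTIALS` is only an illustrative choice, not something taken from this post.

```yaml
# Sketch of the secret-based login that federated credentials replace.
# AZURE_CREDENTIALS is an assumed secret name holding the Service Principal JSON.
steps:
  - name: Log in to Azure with a Service Principal secret
    uses: azure/login@v1
    with:
      creds: ${{ secrets.AZURE_CREDENTIALS }}
```

Any later step in the same job can reference that secret too, which is the exposure described above.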

With the new azure/login@v1.4.0 action you can use Federated credentials to log in to Azure. Because this feature establishes a trust between GitHub and Azure Active Directory, there is no need for a password or secret anymore.

Create App registration and Federated Credential in Azure

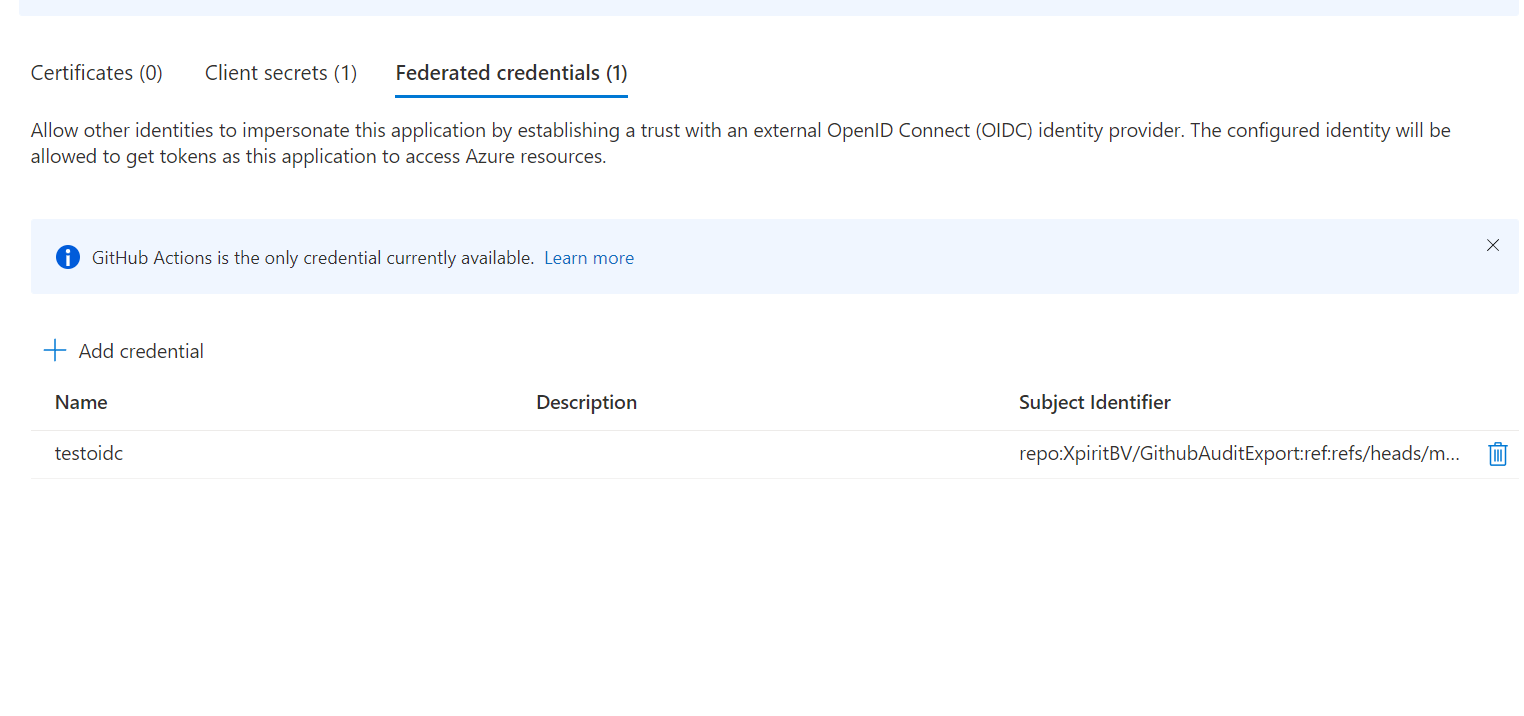

Start by creating a regular App registration in Azure Active Directory. Then go to Certificates & secrets and open the Federated credentials tab.

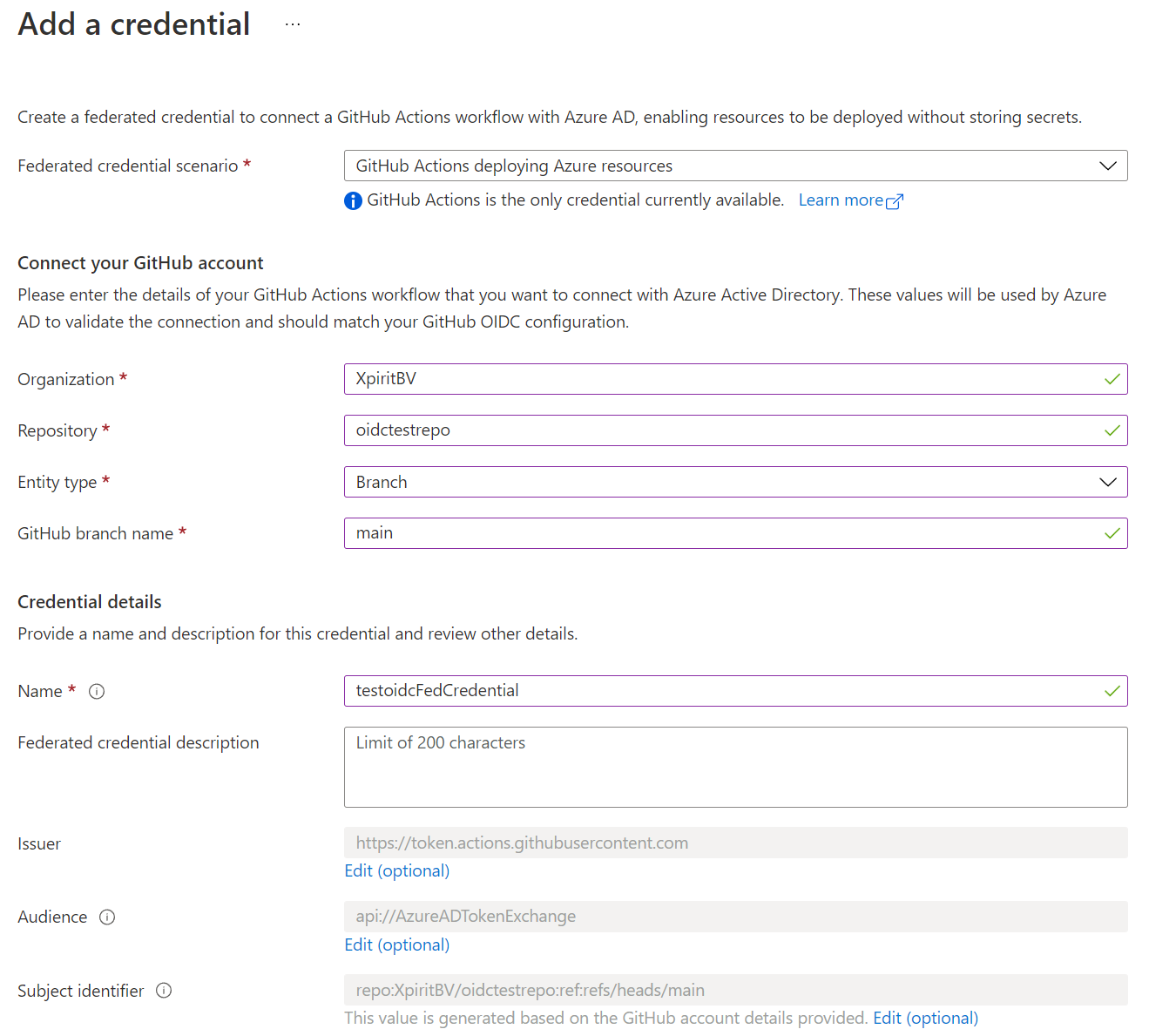

Create a Federated Credential in Azure Active Directory. This is the credential that will be used to log in to Azure. Fill in the correct values for your GitHub repository.

Modify the GitHub workflow to use Federated Credentials to log in to Azure

With the App registration and Federated Credential in place, you can modify the GitHub workflow to use the federated credentials.

An example of the modified workflow is shown below.

```yaml
name: deploy
```

The important part is the change to the azure/login@v1.4.0 action. Instead of providing a client secret, you can now use the federated credentials. The only information needed is the client ID, tenant ID, and subscription ID. Because of the trust between GitHub and Azure Active Directory, no password or secret is required.
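As a rough illustration of the login change described above, a minimal workflow might look like the sketch below. Several details are assumptions rather than part of the original sample: the trigger branch, the job name, and the secret names `AZURE_CLIENT_ID`, `AZURE_TENANT_ID`, and `AZURE_SUBSCRIPTION_ID` are all placeholders. The `id-token: write` permission lets the job request the OpenID Connect token that the action exchanges with Azure Active Directory. The step that installs the Azure CLI beta is left out here.

```yaml
name: deploy

on:
  push:
    branches: [main]   # assumed branch; it should match the Federated Credential

# Needed so the job can request an OpenID Connect token from GitHub.
permissions:
  id-token: write
  contents: read

jobs:
  deploy:              # hypothetical job name
    runs-on: ubuntu-latest
    steps:
      - name: Log in to Azure with federated credentials
        uses: azure/login@v1.4.0
        with:
          # Assumed secret names; none of these values is a password.
          client-id: ${{ secrets.AZURE_CLIENT_ID }}
          tenant-id: ${{ secrets.AZURE_TENANT_ID }}
          subscription-id: ${{ secrets.AZURE_SUBSCRIPTION_ID }}

      - name: Show the active subscription
        run: az account show
```

The three IDs are identifiers rather than credentials, so storing them as secrets is a convenience and not a requirement.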

This sample uses the beta version of the Azure CLI. To install it, please visit Azure CLI Beta.
